Da Shop’s Greatest Hits: Volume 1

The staff at Da Shop: Books + Curiosities round up their most memorable reads of 2024 in two parts. This is part one.

As we approach the end of another year, our booksellers and staff at Da Shop: Books + Curiosities and our parent company Bess Press are reflecting on our most memorable reads of 2024. For the next two months, we will be eagerly sharing the books that have made a lasting impression on us, from lyrical novels to a Pasifika poetry anthology to a beloved middle grade read by a leading Hawai‘i author. So come along as we recap our year of reading, and maybe get some inspiration for that final read of the year.

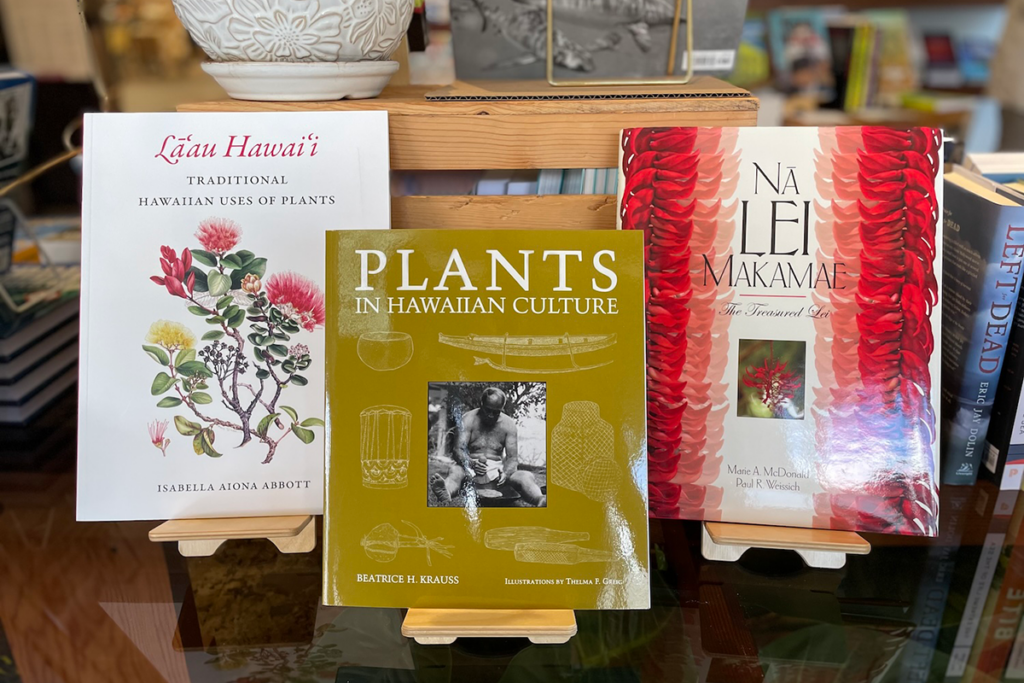

Photo: Courtesy of Da Shop: Books + Curiosities

Clairboyance

By Kristiana Kahakauwila

Selected by Kristen

I’m going to tell you a secret about Clairboyance: the story hook, Clara’s sudden ability to read boys’ thoughts, goes so much deeper than one might assume. This is the hallmark magic of beloved Kanaka Maoli writer Kristiana Kahakauwila, who is as generous and caring to her middle grade readers as she is to her characters, honoring the complexity of feeling conflicted, unmoored and longing for connection. Kahakauwila holds a sacred space for their wending journeys, which unite in a cathartic resolution of cultural, communal, and familial anchoring intimately situated in this ‘āina and its people.

I cried, no, bawled, reading this book, which may have caused some initial hesitation from my 10-year-old daughter when I shared it with her (oops). My daughter has since read it many times over: I often see Clairboyance tucked under her arm or in her bag and I smile, knowing this story continues to nourish us both.

Photo: Courtesy of Da Shop: Books + Curiosities

Enter Ghost

By Isabella Hammad

Selected by Megan

How to capture what this novel means to me? Enter Ghost by Isabella Hammad is a powerful rendering of present-day Palestine and evinces the significance of art-making under the pressures of war and occupation. The book follows actress Sonia Nasir, whose brief return to Haifa is interrupted by the request that she play Gertrude in a West Bank production of Hamlet. Written in compelling, lyrical, and masterfully restrained prose, Enter Ghost depicts Sonia’s involvement in theater as political protest and her poignant journey of self-discovery in her ancestral home. This is a novel with staying power, one I suspect I will return to again and again.

Photo: Courtesy of Da Shop: Books + Curiosities

The Postcard

By Anne Berest

Selected by Jen

An anonymous postcard arrives with nothing written on it except the first names of four family members. This spurs author Anne Berest to figure out who sent it and why. Between the book covers, I get a glimpse into the lives of her great-grandparents, Ephraïm and Emma, her grandaunt, Noémie, and her granduncle, Jacques. It literally is a “glimpse,” as they were all murdered in the Holocaust. Their lives, their hopes and dreams, were cut short for no reason other than being Jewish.

Many emotions come up while reading this book, but gratitude is the one that stays. I am grateful to Anne for sharing their stories, as nobody wants to be forgotten. And I am grateful for the reminder that I still have time and the opportunity to add many chapters to my own story, so I’d better not waste it.

Photo: Courtesy of Da Shop: Books + Curiosities

We the Gathered Heat: Asian American and Pacific Islander Poetry, Performance, and Spoken Word

Edited by Franny Choi, Bao Phi, No‘u Revilla and Terisa Siagatonu

Selected by David

Poetry is not a genre I typically go for, but this title is a searingly good read. It packs power into short-form, personal narratives from a variety of leading authors, poets, and creatives. If you are a person who wants to understand people, the world, identity and the very consciousness of being Asian American and Pacific Islander today, then this is your book.

The collection is curated by standout poets Franny Choi, Bao Phi, No‘u Revilla and Terisa Siagatonu and reads like a deeply personal dinner conversation, where you laugh, cry and yell. It is a brilliantly crafted publication that gathers an immense amount of writing talent, pulls from the past, connects readers with today and casts a possible vision of tomorrow for AAPI voices.

Da Shop: Books + Curiosities, 3565 Harding Ave., open Wednesday through Sunday from 10 a.m. to 4 p.m., (808) 421-9460, dashophnl.com, @dashophnl